In data centers and high-performance computing (HPC), managing heat has grown from an operational hurdle into a major constraint on growth, efficiency, and sustainability. As processor power climbs and rack densities rise, traditional air cooling is approaching its thermal limits. Liquid cooling has emerged as a transformative answer, offering a substantial boost in server efficiency. Technology professionals, data center operators, and anyone invested in the future of digital infrastructure increasingly need to understand how liquid cooling works and what it delivers. Its rise signals a shift in how we build, power, and cool our digital backbone. This article examines the mechanics, advantages, challenges, and future trajectory of liquid cooling. It also explains why liquid cooling is becoming not just an alternative but an essential evolution in data center design.

The Intensifying Heat Challenge in Data Centers

Modern data centers are, at their core, massive heat generators. Every silicon chip, spinning drive, and power supply consumes electrical energy, and virtually all of that energy ends up as heat. As computing demands surge, driven by AI, machine learning, big data analytics, and cloud services, the power density of each server rack has risen sharply. Concentrating that much heat in a confined space creates a formidable thermal management problem.

Traditional air cooling, which relies on Computer Room Air Conditioners (CRACs) or Computer Room Air Handlers (CRAHs) to circulate chilled air through perforated floor tiles and around hot equipment, faces inherent limitations:

- Inefficiency at High Densities: Air is a poor conductor of heat compared to liquids. At high rack densities (e.g., above 15-20 kW per rack), air simply cannot remove heat fast enough, leading to hot spots and equipment performance degradation or failure.

- High Energy Consumption: Moving vast volumes of air requires significant energy. CRAC/CRAH units and the chillers behind them raise a data center's Power Usage Effectiveness (PUE), the ratio of total facility energy to IT equipment energy. Server fans add further load inside the IT equipment itself.

- Footprint and Layout Constraints: Airflow management requires strict hot/cold aisle containment, raised floors, and sufficient space, which can be restrictive for layout flexibility and costly in terms of real estate.

- Environmental Impact: The energy consumed by air cooling adds to a facility's carbon emissions, particularly where grid power comes from fossil fuels.

As these limitations become more pronounced, the industry’s pivot towards liquid cooling solutions is becoming less of a niche luxury and more of an operational necessity.

What is Liquid Cooling? Redefining Thermal Management

At its essence, liquid cooling uses a fluid, rather than air, as the primary medium for carrying heat away from IT components. Liquids conduct heat far better than air. Per unit volume, they can also absorb on the order of a thousand or more times as much heat for the same temperature rise, so a small liquid flow can do the work of a very large volume of air. This fundamental advantage allows dramatically higher power densities within server racks and substantially greater cooling efficiency.
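The sketch below puts the heat-capacity difference in numbers. It applies the steady-state energy balance Q = ρ · V̇ · cp · ΔT to find the volumetric flow of air and of water needed to carry the same heat load. The property values are approximate room-temperature figures. The 30 kW rack and 10 K temperature rise are hypothetical inputs chosen only for illustration.

```python
# Approximate fluid properties near room temperature (textbook values).
AIR_DENSITY_KG_M3 = 1.2
AIR_CP_J_KGK = 1005.0
WATER_DENSITY_KG_M3 = 997.0
WATER_CP_J_KGK = 4180.0


def required_flow_m3_s(heat_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Volumetric flow needed to absorb heat_w watts with a coolant rise of delta_t_k kelvin.

    Rearranges the energy balance Q = rho * V_dot * cp * dT for V_dot.
    """
    return heat_w / (density * cp * delta_t_k)


if __name__ == "__main__":
    rack_heat_w = 30_000.0  # hypothetical 30 kW rack
    delta_t_k = 10.0        # hypothetical coolant temperature rise

    air = required_flow_m3_s(rack_heat_w, AIR_DENSITY_KG_M3, AIR_CP_J_KGK, delta_t_k)
    water = required_flow_m3_s(rack_heat_w, WATER_DENSITY_KG_M3, WATER_CP_J_KGK, delta_t_k)

    print(f"Air:   {air:.2f} m^3/s")
    print(f"Water: {water * 1000 * 60:.1f} L/min")
    print(f"Air needs about {air / water:,.0f}x the volume flow of water")
```

With these inputs, air needs roughly 2.5 m³/s while water needs only about 43 L/min, a ratio of roughly 3,500 to 1. That ratio is simply the ratio of the two fluids' volumetric heat capacities, so it holds for any heat load when both fluids see the same temperature rise.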

Liquid cooling is broadly categorized into several distinct types, each with its unique characteristics, deployment methods, and advantages.

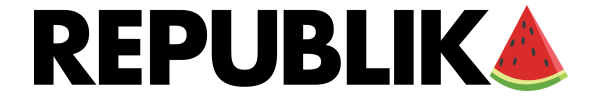

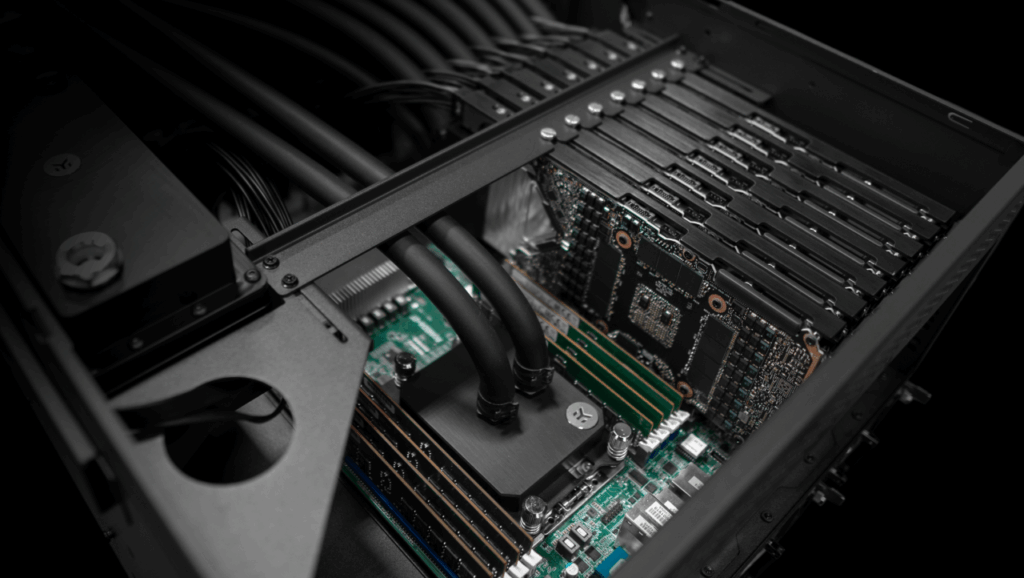

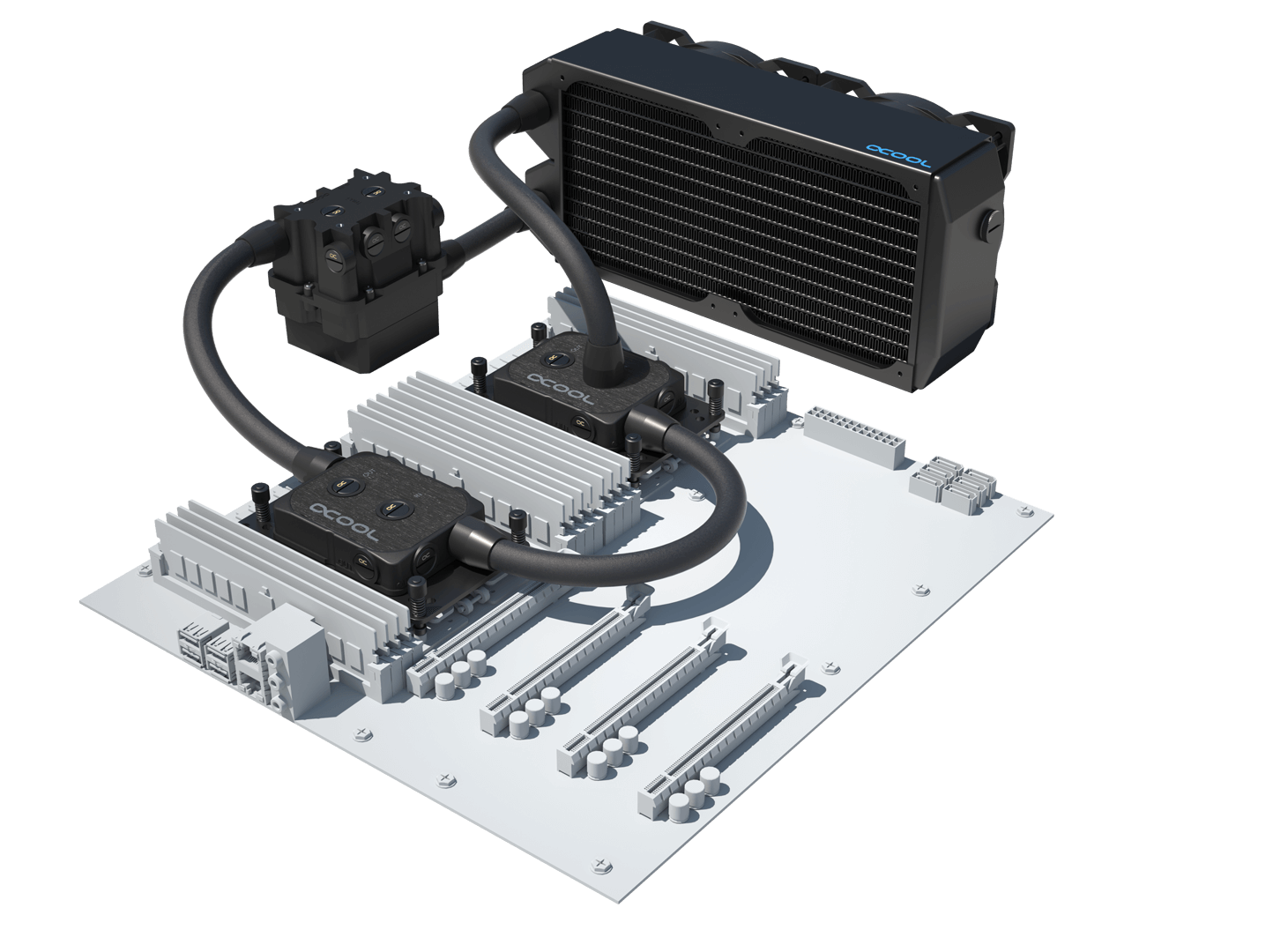

A. Direct-to-Chip (Cold Plate) Cooling

This is a widely adopted and often hybrid approach. In direct-to-chip cooling, specialized cold plates are mounted directly onto the hottest components of a server, typically the CPUs and GPUs. A dielectric fluid (non-electrically conductive) or treated water (often with glycol) circulates through these cold plates, absorbing heat directly from the chip.

- Mechanism: Cool liquid enters the cold plate, flows across the hot surface of the chip, absorbs heat, and exits as warmer liquid to a manifold. From the manifold, the warmed liquid travels to a Coolant Distribution Unit (CDU), which houses pumps and a heat exchanger. There the liquid rejects its heat, usually to a facility water loop or to the surrounding air, before being recirculated.

- Advantages:

- Highly Targeted: Removes heat directly from the source, making it extremely efficient for the hottest components.

- Reduced Server Fan Usage: Can significantly reduce or eliminate the need for server-level fans, saving power and reducing noise.

- Retrofit Potential: Often integrates with existing air-cooled environments, as the remaining server components (RAM, storage) can still be air-cooled. This makes it a popular first step into liquid cooling.

- Lower PUE: Substantially improves Power Usage Effectiveness by reducing cooling energy.

- Considerations: Still requires some air cooling for other components unless fully integrated. Requires specialized cold plate integration with server hardware.
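Cold plates capture only the components they touch. It therefore helps to estimate how a server's heat splits between the liquid loop and the remaining air path, and how warm the loop runs. The sketch below does both with the same energy balance. The component wattages and flow rate are assumptions for illustration, not vendor figures. The water-glycol properties, roughly those of a 25% propylene glycol mix, are also assumptions.

```python
from dataclasses import dataclass

# Assumed properties for a water / ~25% propylene glycol coolant (approximate).
COOLANT_CP_J_KGK = 3900.0
COOLANT_DENSITY_KG_M3 = 1020.0


@dataclass
class Server:
    cold_plate_w: float  # heat from CPUs/GPUs under cold plates
    air_cooled_w: float  # heat from DIMMs, drives, VRMs, NICs still cooled by fans


def liquid_capture_fraction(servers: list[Server]) -> float:
    """Share of total server heat removed by the cold plate loop."""
    liquid_w = sum(s.cold_plate_w for s in servers)
    total_w = liquid_w + sum(s.air_cooled_w for s in servers)
    return liquid_w / total_w


def loop_outlet_temp_c(heat_w: float, flow_l_min: float, inlet_c: float) -> float:
    """Coolant temperature leaving the rack for a given heat load and flow rate."""
    mass_flow_kg_s = flow_l_min / 1000.0 / 60.0 * COOLANT_DENSITY_KG_M3
    return inlet_c + heat_w / (mass_flow_kg_s * COOLANT_CP_J_KGK)


if __name__ == "__main__":
    # Hypothetical rack: 20 servers, 800 W on cold plates and 200 W left to air each.
    rack = [Server(cold_plate_w=800.0, air_cooled_w=200.0) for _ in range(20)]
    liquid_w = sum(s.cold_plate_w for s in rack)
    air_w = sum(s.air_cooled_w for s in rack)
    print(f"Liquid capture: {liquid_capture_fraction(rack):.0%}")
    print(f"Residual air load: {air_w / 1000:.1f} kW")
    print(f"Loop outlet at 30 L/min, 30 C inlet: {loop_outlet_temp_c(liquid_w, 30.0, 30.0):.1f} C")
```

In this hypothetical rack, 80% of the heat goes to the liquid. The remaining 4 kW still has to be handled by room air, which is why direct-to-chip deployments usually keep some air cooling in place.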

B. Immersion Cooling

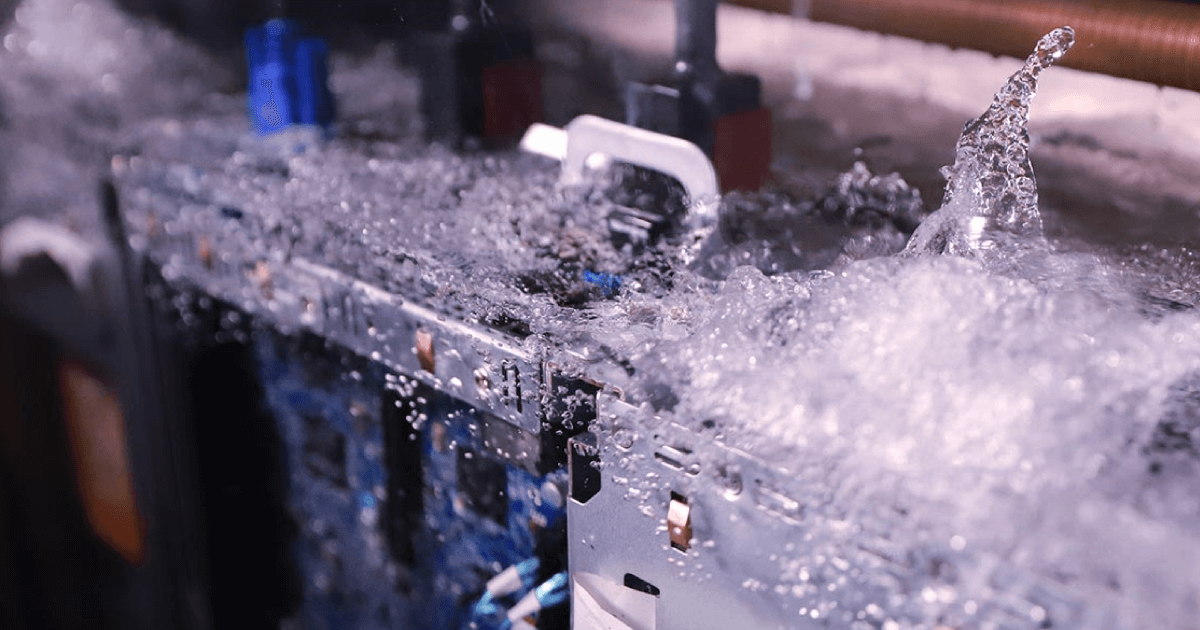

This represents a more radical departure from traditional cooling: IT equipment is fully submerged in a dielectric (electrically non-conductive) fluid. There are two primary types of immersion cooling:

- Single-Phase Immersion Cooling:

- Mechanism: Server components are immersed in a fluid that remains in its liquid state. The heat absorbed by the fluid is then transferred to a heat exchanger within the tank or circulated to an external CDU, similar to cold plate cooling. The fluid never changes phase.

- Advantages:

- Maximum Density: Supports extremely high rack densities, far beyond what air cooling can handle.

- Eliminates All Server Fans: Server fans are removed entirely, eliminating fan power and most acoustic noise. The only moving parts are the pumps that circulate the fluid.

- Improved Component Lifespan: Components are shielded from dust and humidity and see less thermal cycling, potentially extending their operational life.

- Very Low PUE: Can achieve PUEs approaching 1.0, meaning nearly all facility energy goes to the IT equipment itself.

- Considerations: Requires specialized infrastructure (tanks, pumps, filtration). Access to submerged components requires specialized tools or procedures, and the fluid itself needs dedicated management and maintenance.

- Two-Phase Immersion Cooling:

- Mechanism: Servers are immersed in a dielectric fluid whose boiling point is low, below the components' safe operating temperatures. As the fluid absorbs heat from the components, it boils into vapor. The vapor rises, condenses back into liquid on a cold coil at the top of the tank, and drips back into the bath. This creates a continuous, highly efficient passive cooling cycle.

- Advantages:

- Extremely Efficient Heat Transfer: Phase change (boiling and condensing) is an exceptionally effective way to transfer large amounts of heat with minimal energy input (often passive).

- No Pumps Required (often): The natural boiling/condensing cycle can eliminate the need for pumps within the tank, simplifying the system.

- Ultra-High Density Support: Ideal for the most demanding HPC and AI workloads.

- Considerations: Fluids are typically more expensive than single-phase fluids, and tanks must be carefully sealed to limit loss of fluid vapor. Access challenges are similar to those of single-phase immersion.
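The difference between the two immersion approaches comes down to sensible versus latent heat. A single-phase fluid absorbs heat by warming up (Q = ṁ · cp · ΔT). A two-phase fluid absorbs it by boiling (Q = ṁ · h_fg), so the bath stays near the fluid's boiling point. The sketch below compares the fluid mass flow each approach must move or evaporate for the same tank load. The property values are assumed round numbers, in the range typical of hydrocarbon single-phase coolants and fluorinated two-phase fluids. They are not data for any specific product.

```python
# Assumed, illustrative fluid properties (not for any specific product).
SINGLE_PHASE_CP_J_KGK = 2200.0      # sensible heat capacity of a dielectric oil
TWO_PHASE_LATENT_J_KG = 100_000.0   # latent heat of vaporization of a low-boiling fluid


def single_phase_mass_flow_kg_s(heat_w: float, delta_t_k: float) -> float:
    """Fluid that must be pumped through the heat exchanger: Q = m_dot * cp * dT."""
    return heat_w / (SINGLE_PHASE_CP_J_KGK * delta_t_k)


def two_phase_vapor_rate_kg_s(heat_w: float) -> float:
    """Fluid boiled off (and condensed on the coil) each second: Q = m_dot * h_fg."""
    return heat_w / TWO_PHASE_LATENT_J_KG


if __name__ == "__main__":
    tank_heat_w = 100_000.0  # hypothetical 100 kW immersion tank
    print(f"Single-phase, 10 K rise: {single_phase_mass_flow_kg_s(tank_heat_w, 10.0):.2f} kg/s pumped")
    print(f"Two-phase: {two_phase_vapor_rate_kg_s(tank_heat_w):.2f} kg/s boiled and condensed")
```

The two-phase figure is also the rate at which the condenser coil must turn vapor back into liquid. The coil and the facility water feeding it therefore set the tank's capacity.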

C. Rear Door Heat Exchangers (RDHX)

RDHXs do not cool components directly with liquid. Instead, they are a hybrid liquid-air solution that brings liquid into the rack itself to capture heat close to the source.

- Mechanism: A coil filled with chilled liquid is installed as the rear door of a server rack. Hot air exiting the servers passes through the coil, transferring its heat to the liquid, and the cooled air returns to the data center aisle. Passive doors rely on the servers' own fans to push air through the coil, while active doors add fans of their own.

- Advantages:

- Rack-Level Heat Capture: Can remove most, and under favorable conditions all, of a rack's heat before it enters the data center environment.

- Improved Data Center Airflow: Reduces hot spots and improves overall data center cooling efficiency, often eliminating the need for hot aisle containment.

- Retrofit Friendly: Relatively easy to integrate into existing data center infrastructure without significant changes to servers.

- Considerations: Still relies on server fans to move air through the rack, and maximum density is lower than with immersion cooling.
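A rear-door coil can be approximated with the standard heat exchanger effectiveness model, Q = ε · C_min · (T_air,in - T_water,in). Here C is each stream's heat capacity rate, its mass flow times its specific heat. The sketch below estimates how much of a rack's heat a door captures and the temperature of the air it returns to the room. The effectiveness value and all flow and temperature inputs are hypothetical. Real doors come with the manufacturer's own performance data.

```python
AIR_DENSITY_KG_M3 = 1.2
AIR_CP_J_KGK = 1005.0
WATER_CP_J_KGK = 4180.0


def rdhx_performance(rack_heat_w: float, air_flow_m3_s: float, room_air_c: float,
                     water_flow_kg_s: float, water_in_c: float,
                     effectiveness: float) -> dict[str, float]:
    """Estimate rear-door heat exchanger performance with the effectiveness method."""
    c_air = air_flow_m3_s * AIR_DENSITY_KG_M3 * AIR_CP_J_KGK  # W/K
    c_water = water_flow_kg_s * WATER_CP_J_KGK                 # W/K
    exhaust_c = room_air_c + rack_heat_w / c_air                # air leaving the servers
    q_w = effectiveness * min(c_air, c_water) * (exhaust_c - water_in_c)
    return {
        "heat_to_water_w": q_w,
        "air_leaving_door_c": exhaust_c - q_w / c_air,
        "water_out_c": water_in_c + q_w / c_water,
        "capture_fraction": q_w / rack_heat_w,
    }


if __name__ == "__main__":
    # Hypothetical 20 kW rack, 1.5 m^3/s server airflow, 24 C room, 1 kg/s of 20 C water.
    result = rdhx_performance(20_000.0, 1.5, 24.0, 1.0, 20.0, effectiveness=0.6)
    for key, value in result.items():
        print(f"{key}: {value:.2f}")
```

A capture fraction above 1.0 is possible when the water is colder than the room. The door then returns air cooler than the room air entering the rack, which is how a door can remove all of a rack's heat.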

Unpacking the Benefits: Why Liquid Cooling is the Future

The adoption of liquid cooling is driven by compelling advantages that address the most pressing concerns in modern data center operations.

A. Unprecedented Energy Efficiency

This is arguably the most significant driver. By replacing inefficient air with highly conductive liquids, data centers can dramatically reduce their cooling energy consumption.

- Lower PUE: Liquid cooling systems routinely achieve significantly lower PUE (Power Usage Effectiveness) values than air-cooled facilities. Typical air-cooled data centers have PUEs between 1.5 and 2.0. That means facility overhead, mostly cooling, adds 50-100% on top of the energy used by the IT equipment. Liquid-cooled facilities can achieve PUEs as low as 1.03-1.15, leading to substantial energy savings.

- Reduced Fan Power: Eliminating or significantly reducing the need for server fans and large CRAC/CRAH units directly translates to substantial power savings.

- Free Cooling Opportunities: Liquid cooling can reject heat at higher temperatures (e.g., 40-50°C liquid temperatures). Data centers can therefore use outside air for “free cooling”, typically via dry coolers, for a much larger portion of the year, even in warmer climates. This reduces or eliminates reliance on energy-intensive chillers.
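PUE is total facility energy divided by IT equipment energy, so the cooling and other overhead is simply (PUE - 1) times the IT load. The sketch below turns the PUE ranges above into annual energy figures. The 1 MW IT load and the two PUE values are hypothetical inputs. The IT load is held fixed, although in practice removing server fans also trims it slightly.

```python
HOURS_PER_YEAR = 8760


def annual_facility_mwh(it_load_kw: float, pue: float) -> float:
    """Total facility energy per year for a constant IT load."""
    return it_load_kw * pue * HOURS_PER_YEAR / 1000.0


def annual_overhead_mwh(it_load_kw: float, pue: float) -> float:
    """Non-IT (mostly cooling) energy per year: (PUE - 1) x IT energy."""
    return it_load_kw * (pue - 1.0) * HOURS_PER_YEAR / 1000.0


if __name__ == "__main__":
    it_kw = 1000.0  # hypothetical 1 MW of IT load
    air_pue, liquid_pue = 1.6, 1.1  # hypothetical air-cooled vs liquid-cooled PUE
    air = annual_facility_mwh(it_kw, air_pue)
    liquid = annual_facility_mwh(it_kw, liquid_pue)
    print(f"Air-cooled:    {air:,.0f} MWh/yr ({annual_overhead_mwh(it_kw, air_pue):,.0f} overhead)")
    print(f"Liquid-cooled: {liquid:,.0f} MWh/yr ({annual_overhead_mwh(it_kw, liquid_pue):,.0f} overhead)")
    print(f"Savings:       {air - liquid:,.0f} MWh/yr")
```

Cutting PUE from 1.6 to 1.1 in this scenario saves about 4,380 MWh a year, roughly 31% of total facility energy.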

B. Higher Rack Density and Compute Capacity

Liquid cooling liberates data centers from the thermal limitations of air, enabling a new era of ultra-dense computing.

- Packing More Power: Data centers can deploy significantly more powerful processors and servers within the same physical footprint. Racks that might handle 10-15 kW with air cooling can support 50 kW, 100 kW, or even more with liquid cooling.

- Space Optimization: This density increase means less physical space is required for the same amount of compute power, leading to reduced real estate costs in valuable urban areas.

- Supporting Next-Gen Hardware: Critical for deploying cutting-edge AI accelerators, GPUs, and high-core-count CPUs that generate immense heat.
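The density gain translates directly into rack count and floor space. The sketch below sizes a hypothetical 2 MW deployment at the per-rack densities mentioned above. The 2.5 m² of floor area per rack, including its share of aisle space, is an assumed planning figure, not a standard.

```python
import math

FLOOR_AREA_PER_RACK_M2 = 2.5  # assumed, includes a share of aisle space


def racks_needed(total_it_kw: float, kw_per_rack: float) -> int:
    """Smallest whole number of racks that can hold the IT load."""
    return math.ceil(total_it_kw / kw_per_rack)


if __name__ == "__main__":
    total_kw = 2000.0  # hypothetical 2 MW deployment
    for density_kw in (15.0, 50.0, 100.0):
        racks = racks_needed(total_kw, density_kw)
        area = racks * FLOOR_AREA_PER_RACK_M2
        print(f"{density_kw:>5.0f} kW/rack: {racks:>4} racks, ~{area:,.0f} m^2")
```

Real layouts are also limited by power distribution and floor loading, so the space saved in practice depends on more than thermal capacity alone.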

C. Enhanced Performance and Reliability

Cooler components operate more reliably and can perform at peak efficiency.

- Elimination of Hot Spots: Liquid cooling directly removes heat from the hottest components, preventing localized hot spots that can degrade performance and lead to early component failure.

- Stable Operating Temperatures: Maintains components at more consistent and optimal temperatures, reducing thermal stress and extending hardware lifespan.

- Overclocking Potential: In some high-performance scenarios, the superior cooling allows for higher clock speeds and sustained peak performance without throttling.

- Reduced Dust and Contaminants: Especially in immersion cooling, components are sealed from dust, humidity, and other environmental contaminants, further improving reliability.

D. Environmental and Sustainability Advantages

Liquid cooling aligns perfectly with the growing imperative for green data centers.

- Lower Carbon Footprint: Energy savings translate directly into reduced greenhouse gas emissions, especially where grid power is carbon-intensive.

- Reduced Water Usage: Many liquid cooling systems are closed-loop, or reject heat via dry coolers, significantly reducing or eliminating the massive water consumption typically associated with evaporative cooling towers in air-cooled facilities. This is crucial in water-stressed regions.

- Heat Reuse Potential: The higher-temperature waste heat from liquid cooling (e.g., 40-60°C) is more valuable and easier to capture for reuse. This “waste heat” can be repurposed for building heating, district heating networks, or even agricultural applications, making data center energy use more circular.
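Both the carbon and heat-reuse benefits can be estimated with simple arithmetic, since nearly all electrical power drawn by IT equipment ends up as heat. All inputs in the sketch below are hypothetical: the grid carbon intensity, the average utilization, the liquid capture fraction, and the share of captured heat that finds a use (district heating demand is seasonal). Substitute local figures for a real assessment.

```python
HOURS_PER_YEAR = 8760


def avoided_emissions_t(energy_saved_mwh: float, grid_t_co2_per_mwh: float) -> float:
    """Tonnes of CO2 avoided by not consuming energy_saved_mwh from the grid."""
    return energy_saved_mwh * grid_t_co2_per_mwh


def reusable_heat_mwh(it_load_kw: float, utilization: float,
                      capture_fraction: float, reuse_fraction: float) -> float:
    """Annual heat delivered to a reuse application.

    it_load_kw * utilization approximates average IT power (and thus heat);
    capture_fraction is the share carried off by liquid; reuse_fraction is
    the share of that heat a consumer can actually take over the year.
    """
    return it_load_kw * utilization * capture_fraction * reuse_fraction * HOURS_PER_YEAR / 1000.0


if __name__ == "__main__":
    # Hypothetical inputs: 4,380 MWh/yr saved, 0.4 t CO2/MWh grid, 1 MW IT load.
    print(f"Avoided emissions: {avoided_emissions_t(4380.0, 0.4):,.0f} t CO2/yr")
    print(f"Reusable heat: {reusable_heat_mwh(1000.0, 0.7, 0.8, 0.5):,.0f} MWh/yr")
```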

E. Quieter Operations

Eliminating numerous server fans and large CRAC units results in significantly quieter data center environments, improving working conditions for staff.

Challenges and Considerations for Adoption

Despite its compelling benefits, the transition to liquid cooling is not without its challenges and requires careful planning.

A. Higher Initial Capital Expenditure

The upfront cost for liquid cooling infrastructure (CDUs, manifolds, cold plates, immersion tanks, dielectric fluids) can be higher than traditional air cooling components. However, this is often offset by long-term operational savings and higher compute density.
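Whether operational savings offset the extra capital cost is usually judged with a payback period or a net present value (NPV). The sketch below computes both. The incremental capex, energy price, discount rate, and horizon are hypothetical. The model also ignores maintenance differences, density-driven space savings, and changes in energy prices.

```python
import math


def simple_payback_years(extra_capex: float, annual_savings: float) -> float:
    """Years until cumulative undiscounted savings equal the extra investment."""
    if annual_savings <= 0:
        return math.inf
    return extra_capex / annual_savings


def npv(extra_capex: float, annual_savings: float, discount_rate: float, years: int) -> float:
    """Net present value of paying extra_capex now for a constant yearly saving."""
    discounted = sum(annual_savings / (1.0 + discount_rate) ** t for t in range(1, years + 1))
    return discounted - extra_capex


if __name__ == "__main__":
    extra_capex = 2_000_000.0        # hypothetical incremental cost of liquid cooling
    annual_savings = 4380.0 * 100.0  # hypothetical 4,380 MWh/yr saved at $100/MWh
    print(f"Simple payback: {simple_payback_years(extra_capex, annual_savings):.1f} years")
    print(f"10-year NPV at 8%: ${npv(extra_capex, annual_savings, 0.08, 10):,.0f}")
```

Simple payback is easy to communicate but ignores the time value of money. The NPV check discounts each year's savings, so a positive result indicates the investment clears the chosen discount rate.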

B. Plumbing and Leak Concerns

The introduction of liquids into the IT environment raises natural concerns about leaks. Modern liquid cooling systems are designed with redundancy, leak detection, and robust materials, but meticulous installation and maintenance protocols are essential.
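Leak detection typically combines direct sensing, such as leak-sensing cable under manifolds or in drip trays, with indirect signs. Those signs include a mismatch between supply and return flow or a falling reservoir level. The sketch below shows that kind of rule-based check. The reading structure, field names, and thresholds are hypothetical. A production system would take them from the CDU vendor's telemetry and alarm settings.

```python
from dataclasses import dataclass


@dataclass
class LoopReading:
    """One telemetry sample from a hypothetical CDU / rack manifold."""
    supply_flow_l_min: float
    return_flow_l_min: float
    reservoir_level_pct: float
    leak_cable_wet: bool


def leak_alarms(reading: LoopReading, previous_level_pct: float,
                flow_tolerance_l_min: float = 0.5,
                max_level_drop_pct: float = 1.0) -> list[str]:
    """Return human-readable alarms; an empty list means no leak indicators."""
    alarms = []
    if reading.leak_cable_wet:
        alarms.append("liquid detected by leak-sensing cable")
    if reading.supply_flow_l_min - reading.return_flow_l_min > flow_tolerance_l_min:
        alarms.append("return flow lower than supply flow")
    if previous_level_pct - reading.reservoir_level_pct > max_level_drop_pct:
        alarms.append("reservoir level dropping")
    return alarms


if __name__ == "__main__":
    normal = LoopReading(30.0, 29.8, 80.0, False)
    suspect = LoopReading(30.0, 28.9, 78.5, False)
    print(leak_alarms(normal, previous_level_pct=80.0))
    print(leak_alarms(suspect, previous_level_pct=80.0))
```

Flow meters carry measurement error, so a mismatch threshold only catches larger leaks. That is why the direct sensor check sits alongside the indirect ones.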

C. Interoperability and Standardization

The industry is still working towards universal standards for liquid cooling interfaces, components, and fluids, which can sometimes lead to vendor lock-in or integration complexities.

D. Skill Set and Training

Data center staff require new skill sets and training for installing, maintaining, and troubleshooting liquid cooling infrastructure. This includes handling fluids, managing plumbing, and understanding specialized equipment.

E. Server Hardware Compatibility

Although support is growing, not all server hardware is designed for liquid cooling. Adapting existing air-cooled servers to liquid cooling (e.g., by adding cold plates) can be complex and requires specific vendor solutions.

The Future Trajectory of Liquid Cooling

The trajectory of liquid cooling is undeniably upward, driven by market forces, technological advancements, and regulatory pressures.

A. Hybrid Deployments as the Norm

Many data centers will continue to adopt a hybrid approach, using liquid cooling for high-density racks and air cooling for less demanding equipment. Rear Door Heat Exchangers and direct-to-chip solutions will remain popular for phased transitions.

B. Increasing Adoption of Immersion Cooling

As HPC, AI, and edge computing demands grow, the unparalleled efficiency and density of single-phase and two-phase immersion cooling will become increasingly attractive. Specialized hardware for immersion will become more common.

C. Standardization and Ecosystem Maturation

Industry bodies are actively working on standardization (e.g., Open Compute Project’s Open Rack and OCP Cooling efforts) for liquid cooling components, interfaces, and fluids. This will reduce complexity, lower costs, and accelerate adoption.

D. Edge Computing and Modular Data Centers

Liquid cooling’s compact footprint and ability to operate in diverse environments make it ideal for edge computing deployments, where space is limited and traditional cooling might be impractical. It also supports highly efficient modular data center designs.

E. Heat Reuse and Sustainability Mandates

Driven by corporate sustainability goals and potentially future regulations, the focus on capturing and reusing waste heat from liquid cooling will intensify, transforming data centers into energy sources rather than just energy consumers. This will contribute significantly to global decarbonization efforts.

F. Advancements in Dielectric Fluids

Ongoing research and development are leading to more efficient, safer, and environmentally friendly dielectric fluids with improved thermal properties and lower global warming potential.

G. Integration with Renewable Energy Sources

The inherent efficiency of liquid cooling makes it an ideal partner for renewable energy sources. Coupling a highly efficient liquid-cooled data center with solar, wind, or geothermal power creates a truly sustainable and resilient digital infrastructure.

Case Studies: Real-World Impact

Numerous companies and research institutions are already leveraging liquid cooling to achieve groundbreaking results:

- Supercomputing Centers: Many of the world’s fastest supercomputers are entirely liquid-cooled, demonstrating the technology’s capability to handle extreme heat loads for cutting-edge scientific research.

- Hyperscale Cloud Providers: Large cloud providers are increasingly deploying liquid cooling in segments of their data centers to achieve higher densities and lower operational costs for their most demanding services (e.g., AI inference).

- Edge Data Centers: Smaller, modular liquid-cooled units are being deployed closer to end-users to support low-latency applications, showcasing the versatility of the technology in diverse environments.

- Enterprise Data Centers: Traditional enterprises are adopting liquid cooling for specific high-density applications, reducing their data center footprint and energy bills without a complete overhaul.

These real-world examples underscore that liquid cooling is no longer a futuristic concept but a proven technology delivering tangible benefits today.

A Cooler, Greener, More Powerful Future

The trajectory of the digital world demands ever-increasing compute power, and with it, the challenge of managing heat. Liquid cooling offers a compelling, efficient, and sustainable answer to this fundamental problem. By harnessing the superior thermal properties of fluids, data centers can achieve unprecedented levels of energy efficiency, pack more processing power into smaller footprints, enhance the reliability and performance of critical IT infrastructure, and significantly reduce their environmental impact. While the transition presents initial investments and learning curves, the long-term operational savings, the ability to support next-generation workloads, and the imperative for sustainability make liquid cooling an undeniable cornerstone of future data center design. It’s not just about keeping servers cool; it’s about enabling the next wave of technological innovation, building a more energy-efficient digital backbone, and paving the way for a greener, more powerful tomorrow. Embracing these advanced thermal management strategies is crucial for any organization aiming to stay at the forefront of technological advancement and optimize its operational footprint.